Operators are the building blocks Airflow uses to define workflows in a modular, reusable way: each operator describes a single task. Tasks can depend on other tasks, which is how complex workflows are built up. Because the components are modular, users can customize them to meet their specific needs and integrate them with other tools in their data processing pipeline. Overall, the Airflow architecture is designed to be flexible and scalable, so it can handle large-scale data processing workflows.
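To make the idea of task dependencies concrete, here is a minimal sketch in plain Python. In a real DAG file, Airflow operators are chained with the `>>` operator (for example `extract >> transform >> load`). The `Task` class below is a hypothetical toy stand-in that only records the relationships, not Airflow's actual operator class, and the task names are made up for illustration.

```python
class Task:
    """Toy stand-in for an Airflow operator instance (not Airflow's real class)."""

    def __init__(self, task_id):
        self.task_id = task_id
        self.upstream = set()    # task_ids that must finish first
        self.downstream = set()  # task_ids that wait on this task

    def __rshift__(self, other):
        # `a >> b` means "run a before b"; returning `other` allows chaining.
        self.downstream.add(other.task_id)
        other.upstream.add(self.task_id)
        return other


extract = Task("extract")
transform = Task("transform")
load = Task("load")
notify = Task("notify")

# Chain three tasks, then fan out from `transform` to a second branch.
extract >> transform >> load
transform >> notify

print(sorted(transform.downstream))  # ['load', 'notify']
print(sorted(load.upstream))         # ['transform']
```

The point of the sketch is that a dependency is just a directed edge between two tasks. Because edges only ever point downstream, the result is a directed acyclic graph, which is what the scheduler walks when deciding what can run next.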


Airflow supports several executor types, including SequentialExecutor, LocalExecutor, CeleryExecutor, and KubernetesExecutor.

SequentialExecutor: SequentialExecutor is the simplest executor and runs tasks one at a time. It is useful for testing and development, where only a few tasks are involved and parallel execution is not needed.

LocalExecutor: LocalExecutor runs tasks as parallel processes on the Airflow server itself. It is useful when you have a single machine and only a modest number of tasks to execute. It is more efficient than SequentialExecutor because it can execute tasks in parallel, but it is still limited in scalability and fault tolerance by that one machine.

CeleryExecutor: CeleryExecutor is a distributed executor that allows Airflow to run tasks across multiple workers, such as a cluster of servers or a cloud-based environment. It uses Celery as the distributed task queue, which in turn relies on a message broker. CeleryExecutor is recommended when running Airflow at a larger scale, as it provides better scalability and fault tolerance.

Database: Airflow stores its metadata, such as DAG runs and task instance states, in a backend database. Airflow supports several databases, including PostgreSQL, MySQL, and SQLite (SQLite is intended for development only).

Message broker: With CeleryExecutor, a message broker carries task messages from the scheduler to the workers. Redis and RabbitMQ are the commonly used brokers.

DAGs: Airflow DAGs (Directed Acyclic Graphs) define the workflows and tasks to be executed. DAGs are defined in Python code and typically contain multiple tasks that are executed in a specific order.
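The executor, metadata database, and broker are all selected through Airflow configuration, which can be set in `airflow.cfg` or through environment variables named `AIRFLOW__{SECTION}__{KEY}`. The sketch below builds those variable names for a CeleryExecutor setup. The helper `airflow_env_var` is a hypothetical convenience function, and the connection strings are placeholder values, not working endpoints. Note that `sql_alchemy_conn` lives in the `[database]` section in recent Airflow 2.x releases; older releases kept it under `[core]`.

```python
def airflow_env_var(section, key):
    """Build the environment variable name Airflow reads for a config option."""
    return f"AIRFLOW__{section.upper()}__{key.upper()}"


# Placeholder values for illustration only; hosts and credentials are made up.
settings = {
    ("core", "executor"): "CeleryExecutor",
    ("database", "sql_alchemy_conn"): "postgresql+psycopg2://airflow:airflow@db-host/airflow",
    ("celery", "broker_url"): "redis://broker-host:6379/0",
}

env = {airflow_env_var(section, key): value for (section, key), value in settings.items()}

for name, value in env.items():
    print(f"{name}={value}")
```

For local development, the same pattern with `AIRFLOW__CORE__EXECUTOR` set to `LocalExecutor` and no broker setting is usually enough, since only CeleryExecutor needs a broker.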

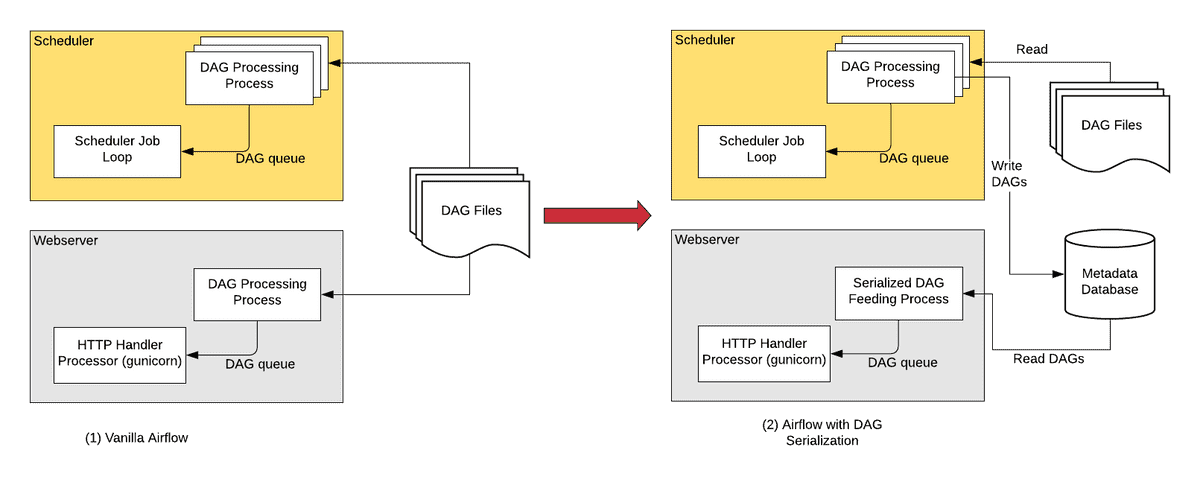

We’ll also cover best practices for using Apache Airflow.

Knowing the architecture of Airflow is crucial for effective workflow orchestration and data pipeline management. We’ll explore the key components and interactions that make up Airflow’s architecture.

Airflow has a modular architecture that consists of several components, each responsible for a specific job.

Web server: The Airflow web server provides a user-friendly UI for managing workflows and tasks. It allows users to view DAGs, monitor task progress, and inspect task dependencies.

Scheduler: The Airflow scheduler is responsible for scheduling tasks and triggering task instances to run. It checks for tasks that are due and creates task instances based on the defined schedule.

Executor: The Airflow executor is responsible for executing the tasks defined in Airflow DAGs. It receives tasks from the scheduler and runs them when they are triggered.
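The hand-off between scheduler and executor can be illustrated with a small simulation. This is a simplified sketch and not Airflow's actual implementation: the `dependencies` mapping, the task names, and the functions `schedule_ready` and `run_dag` are all hypothetical. The scheduler step finds tasks whose upstream tasks have finished and queues them; the executor step takes one queued task at a time and "runs" it.

```python
from collections import deque

# Hypothetical DAG: each task maps to the set of tasks it depends on.
dependencies = {
    "extract": set(),
    "transform": {"extract"},
    "load": {"transform"},
    "report": {"transform"},
}


def schedule_ready(dependencies, done, queued):
    """Scheduler step: pick tasks whose upstream tasks have all finished."""
    return sorted(
        task
        for task, upstream in dependencies.items()
        if task not in done and task not in queued and upstream <= done
    )


def run_dag(dependencies):
    done, queue, run_order = set(), deque(), []
    while len(done) < len(dependencies):
        # Scheduler: hand newly runnable tasks to the executor's queue.
        queue.extend(schedule_ready(dependencies, done, set(queue)))
        if not queue:
            raise ValueError("no runnable tasks; the graph has a cycle")
        # Executor: take one queued task and "run" it.
        task = queue.popleft()
        run_order.append(task)
        done.add(task)
    return run_order


print(run_dag(dependencies))  # ['extract', 'transform', 'load', 'report']
```

This toy executor behaves like a sequential one, running a single task per loop iteration. A parallel executor would instead take every queued task at once, so `load` and `report` could run side by side once `transform` has finished.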


Additionally, the article will explain how to get started with Apache Airflow, including installation and configuration, and how to write DAG code.

Are you seeking a powerful, user-friendly platform to manage your data workflows? If so, Apache Airflow might be just what you need. Apache Airflow is a powerful, open-source platform for managing complex data workflows and machine learning tasks. Its Python-based architecture integrates with other Python tools, and its web-based interface simplifies monitoring and managing workflows. With features like task dependency management and retries, Airflow streamlines workflow management and improves efficiency for teams of any size.

Apache Airflow can transform your data engineering and workflow management processes, letting you automate tasks, monitor progress, and collaborate with your team, all from a single platform. In this article, we’ll take you on a journey through Apache Airflow’s architecture, components, and key features.

Earthly is a powerful tool that can automate and streamline data engineering CI processes. Check it out.


We simplify and speed up software building with containerization.
